by Dr Freddy Wordingham

Web App

10. Uploading an image with the UI

We've added the backend route to accept an image, so now let's add a drag-and-drop element to the frontend to trigger it.

📦 Dependencies

We're going to need some more dependencies for the frontend, so change directory into frontend/ and use npm install:

cd frontend/

npm install --save react-dropzone

cd ..

ℹ️ We shouldn't need the --save flag (since npm 5, npm install saves dependencies to package.json by default), but when a friend ran these steps without it, the dependency wasn't saved to her package.json file and the app complained further down the line. In any case, it doesn't hurt!

📸 ImageUpload Component

Go ahead and create a new file for our component at frontend/src/ImageUpload.tsx:

touch frontend/src/ImageUpload.tsx

And inside it add the following:

import { useCallback, useState } from "react";
import { useDropzone, FileWithPath } from "react-dropzone";

function ImageUpload() {
  const [imagePreview, setImagePreview] = useState<string | null>(null);
  const [predictedClass, setPredictedClass] = useState<string | null>(null);

  const onDrop = useCallback((acceptedFiles: FileWithPath[]) => {
    const file = acceptedFiles[0];
    const reader = new FileReader();
    reader.onloadend = () => {
      setImagePreview(reader.result as string);
    };
    if (file) {
      reader.readAsDataURL(file);
    }

    const formData = new FormData();
    formData.append("file", file);

    fetch("/dimensions", {
      method: "POST",
      body: formData,
    })
      .then((request) => request.json())
      .then((data) => {
        console.log(data);
      })
      .catch((error) => console.log(`Error: ${error}`));
  }, []);

  const { getRootProps, getInputProps, isDragActive } = useDropzone({ onDrop });

  const style = {
    padding: "20px",
    border: isDragActive ? "2px dashed cyan" : "2px dashed gray",
  };

  if (imagePreview) {
    return (
      <div>
        <img src={imagePreview} width="400px" alt="Preview" />
        {predictedClass && <p>Prediction: {predictedClass}</p>}
      </div>
    );
  }

  return (
    <div {...getRootProps()} style={style}>
      <input {...getInputProps()} />
      <div>Drop Image Here!</div>
    </div>
  );
}

export default ImageUpload;

From the top we've:

- Imported useCallback and useState from React for memoized functions and managing state, and useDropzone and the FileWithPath type from react-dropzone:

import { useCallback, useState } from "react";
import { useDropzone, FileWithPath } from "react-dropzone";

- Created a new React component called ImageUpload:

function ImageUpload() {

- Declared two state variables, imagePreview and predictedClass, to hold the image preview and the prediction result, respectively:

const [imagePreview, setImagePreview] = useState<string | null>(null);
const [predictedClass, setPredictedClass] = useState<string | null>(null);

- The onDrop callback takes the first accepted file, uses the FileReader API to read it as a base64-encoded data URL, and stores the result in imagePreview. It then sends the file in a POST request to the "/dimensions" endpoint and logs the JSON response:

const onDrop = useCallback((acceptedFiles: FileWithPath[]) => {
  const file = acceptedFiles[0];
  const reader = new FileReader();
  reader.onloadend = () => {
    setImagePreview(reader.result as string);
  };
  if (file) {
    reader.readAsDataURL(file);
  }

  const formData = new FormData();
  formData.append("file", file);

  fetch("/dimensions", {
    method: "POST",
    body: formData,
  })
    .then((request) => request.json())
    .then((data) => {
      console.log(data);
    })
    .catch((error) => console.log(`Error: ${error}`));
}, []);

- We use useDropzone to make our component into a drag-and-drop zone:

const { getRootProps, getInputProps, isDragActive } = useDropzone({ onDrop });

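- The style object switches the dashed border from gray to cyan while a file is being dragged over the zone:

const style = {
  padding: "20px",
  border: isDragActive ? "2px dashed cyan" : "2px dashed gray",
};

- If an image has been dropped, we render its preview (plus the prediction, once we have one); otherwise we render the drag-and-drop zone:

if (imagePreview) {
  return (
    <div>
      <img src={imagePreview} width="400px" alt="Preview" />
      {predictedClass && <p>Prediction: {predictedClass}</p>}
    </div>
  );
}

return (
  <div {...getRootProps()} style={style}>
    <input {...getInputProps()} />
    <div>Drop Image Here!</div>
  </div>
);
}

- Finally, we export the component so that App.tsx can import it:

export default ImageUpload;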
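The onDrop callback above takes acceptedFiles[0] without checking what kind of file it is, and the preview relies on FileReader producing a data URL of the form data:<MIME type>;base64,<payload>. The sketch below makes both steps explicit with two hypothetical helpers, firstImageFile and parseDataUrl (neither is part of react-dropzone or the tutorial's code). It assumes only the name and type fields of a dropped file, so it can run outside a browser; react-dropzone's own accept option is another way to restrict which file types are accepted.

```typescript
// Hypothetical structural stand-in for a browser File: only the fields we read.
// In the component, react-dropzone's FileWithPath objects would be passed in instead.
interface NamedFile {
  name: string;
  type: string; // MIME type reported by the browser, e.g. "image/png"
}

// Return the first dropped file whose MIME type marks it as an image, if any.
function firstImageFile<T extends NamedFile>(files: readonly T[]): T | undefined {
  return files.find((file) => file.type.startsWith("image/"));
}

interface DataUrlParts {
  mimeType: string;
  base64: string;
  byteLength: number; // size of the decoded payload in bytes
}

// Split a padded base64 data URL ("data:<mime>;base64,<payload>") into its parts.
// Returns null for anything that is not a base64 data URL.
function parseDataUrl(dataUrl: string): DataUrlParts | null {
  const match = /^data:([^;,]+);base64,([A-Za-z0-9+/]*={0,2})$/.exec(dataUrl);
  if (match === null) {
    return null;
  }
  const [, mimeType = "", base64 = ""] = match;
  // Every 4 base64 characters encode 3 bytes; trailing "=" characters are padding.
  const padding = base64.endsWith("==") ? 2 : base64.endsWith("=") ? 1 : 0;
  const byteLength = Math.floor(base64.length / 4) * 3 - padding;
  return { mimeType, base64, byteLength };
}
```

Inside onDrop, something like const file = firstImageFile(acceptedFiles); if (!file) return; would skip both the preview and the upload when no image was dropped, and logging parseDataUrl(reader.result as string) can help when debugging what the preview is showing.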

🖼️ View image dimensions

Now we've got to update the frontend/src/App.tsx file to use our new component:

import "./App.css";
import ImageUpload from "./ImageUpload";

function App() {
  return (
    <div className="App">
      <header className="App-header">
        <ImageUpload />
      </header>
    </div>
  );
}

export default App;

Re-build the frontend and launch the backend:

cd frontend

npm run build

cd ..

python -m uvicorn main:app --port 8000 --reload

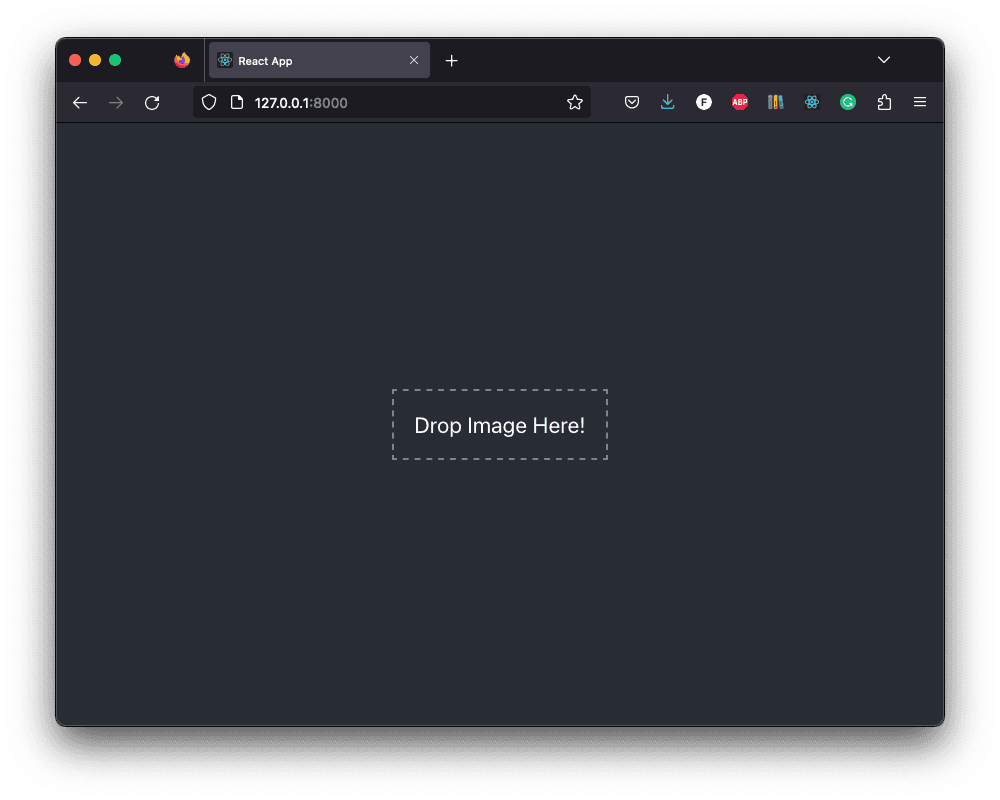

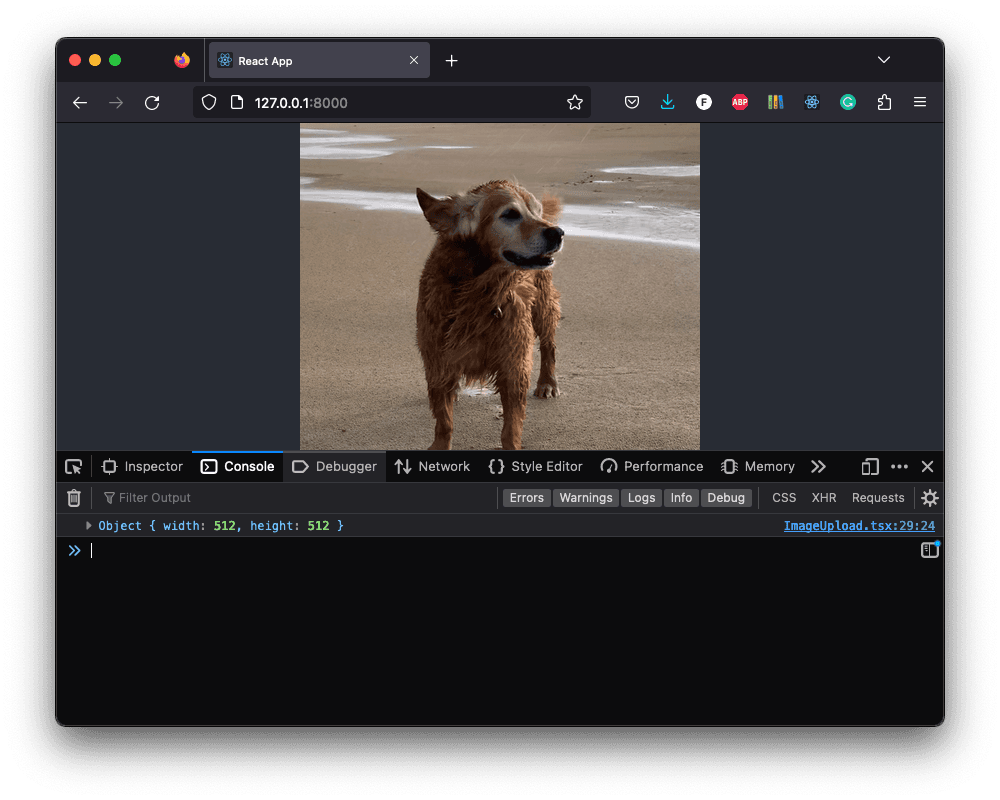

We'll be able to visit http://localhost:8000 and use the GUI to tell us the image width and height:

This information will be displayed in the console:
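At the moment the response is only logged. If you later want to show the width and height on the page, it helps to check the HTTP status and the shape of the JSON first: the current chain calls .json() on whatever comes back, so an error response (for example a 422 from FastAPI when no file is attached) would simply be logged as if it were data. Below is a hedged sketch; fetchDimensions, isDimensions, formatDimensions and the Fetcher type are hypothetical names, and fetch is passed in as a parameter (an assumption made so the helper can be exercised with a fake instead of a running server).

```typescript
// Shape of the JSON produced by the backend's DimensionsOutput model in main.py.
interface Dimensions {
  width: number;
  height: number;
}

// The small slice of a Fetch API response that we actually use.
interface MinimalResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

// Hypothetical injectable fetch signature; the browser's fetch should fit it.
type Fetcher<B> = (url: string, init: { method: string; body: B }) => Promise<MinimalResponse>;

// Runtime check that the parsed JSON really has integer width and height fields.
function isDimensions(data: unknown): data is Dimensions {
  if (typeof data !== "object" || data === null) {
    return false;
  }
  const { width, height } = data as { width?: unknown; height?: unknown };
  return (
    typeof width === "number" &&
    Number.isInteger(width) &&
    typeof height === "number" &&
    Number.isInteger(height)
  );
}

// POST the upload body to /dimensions, failing loudly on HTTP errors or unexpected JSON.
async function fetchDimensions<B>(body: B, fetcher: Fetcher<B>): Promise<Dimensions> {
  const response = await fetcher("/dimensions", { method: "POST", body });
  if (!response.ok) {
    throw new Error(`Upload failed with HTTP status ${response.status}`);
  }
  const data = await response.json();
  if (!isDimensions(data)) {
    throw new Error(`Unexpected response: ${JSON.stringify(data)}`);
  }
  return data;
}

// Human-readable label for the page, e.g. "640 x 480 px".
function formatDimensions({ width, height }: Dimensions): string {
  return `${width} x ${height} px`;
}
```

In the component you would pass the real fetch, e.g. fetchDimensions(formData, fetch), and could keep formatDimensions(result) in a new piece of state instead of logging it; that wiring is left as an assumption here.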

💡 Or, as a one-liner:

cd frontend && npm run build && cd - && python -m uvicorn main:app --port 8000 --reload

📑 APPENDIX

🎽 How to Run

🧱 Build Frontend

Navigate to the frontend/ directory:

cd frontend

Install any missing frontend dependencies:

npm install

Build the files for distributing the frontend to clients:

npm run build

🖲 Run the Backend

Go back to the project root directory:

cd ..

Activate the virtual environment, if you haven't already:

source .venv/bin/activate

Install any missing packages:

pip install -r requirements.txt

If you haven't already, train a CNN:

python scripts/train.py

Continue training an existing model:

python scripts/continue_training.py

Serve the web app:

python -m uvicorn main:app --port 8000 --reload

🗂️ Updated Files

Project structure

.
├── .venv/
├── .gitignore
├── resources
│   └── dog.jpg
├── frontend
│   ├── build/
│   ├── node_modules/
│   ├── public/
│   ├── src
│   │   ├── App.css
│   │   ├── App.test.tsx
│   │   ├── App.tsx
│   │   ├── ImageUpload.tsx
│   │   ├── index.css
│   │   ├── index.tsx
│   │   ├── logo.svg
│   │   ├── react-app-env.d.ts
│   │   ├── reportWebVitals.ts
│   │   ├── setupTests.ts
│   │   └── Sum.tsx
│   ├── .gitignore
│   ├── package-lock.json
│   ├── package.json
│   ├── README.md
│   └── tsconfig.json
├── output
│   ├── activations_conv2d/
│   ├── activations_conv2d_1/
│   ├── activations_conv2d_2/
│   ├── activations_dense/
│   ├── activations_dense_1/
│   ├── model.h5
│   ├── sample_images.png
│   └── training_history.png
├── scripts
│   ├── classify.py
│   ├── continue_training.py
│   └── train.py
├── main.py
├── README.md
└── requirements.txt

requirements.txt

tensorflow
matplotlib
fastapi
mangum
uvicorn
pillow
python-multipart
numpy==1.23.1

main.py

from fastapi import FastAPI, File, UploadFile
from fastapi.middleware.cors import CORSMiddleware
from fastapi.staticfiles import StaticFiles
from mangum import Mangum
from PIL import Image
from pydantic import BaseModel
from tensorflow.keras import models
import numpy as np
import os
import tensorflow as tf

# Instantiate the app
app = FastAPI()


# Ping test method
@app.get("/ping")
def ping():
    return "pong!"


class SumInput(BaseModel):
    a: int
    b: int


class SumOutput(BaseModel):
    sum: int


# Sum two numbers together
@app.post("/sum")
def sum(input: SumInput):
    return SumOutput(sum=input.a + input.b)


class DimensionsOutput(BaseModel):
    width: int
    height: int


# Tell us the dimensions of an image
@app.post("/dimensions")
def dimensions(file: UploadFile = File(...)):
    image = Image.open(file.file)
    image_array = np.array(image)
    # Image arrays are indexed (rows, columns[, channels]), i.e. (height, width[, channels])
    width = image_array.shape[1]
    height = image_array.shape[0]
    return DimensionsOutput(width=width, height=height)


# Serve our React application at the root
app.mount("/", StaticFiles(directory=os.path.join("frontend", "build"), html=True), name="build")

# CORS
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],  # Permits requests from all origins.
    # Allows cookies and credentials to be included in the request.
    allow_credentials=True,
    allow_methods=["*"],  # Allows all HTTP methods.
    allow_headers=["*"]  # Allows all headers.
)

# Define the Lambda handler
handler = Mangum(app)


# Prevent Lambda showing errors in CloudWatch by handling warm-up requests correctly
def lambda_handler(event, context):
    if "source" in event and event["source"] == "aws.events":
        print("This is a warm-up invocation")
        return {}
    else:
        return handler(event, context)

frontend/src/App.tsx

import "./App.css";
import ImageUpload from "./ImageUpload";

function App() {
  return (
    <div className="App">
      <header className="App-header">
        <ImageUpload />
      </header>
    </div>
  );
}

export default App;

frontend/src/ImageUpload.tsx

import { useCallback, useState } from "react";
import { useDropzone, FileWithPath } from "react-dropzone";

function ImageUpload() {
  const [imagePreview, setImagePreview] = useState<string | null>(null);
  const [predictedClass, setPredictedClass] = useState<string | null>(null);

  const onDrop = useCallback((acceptedFiles: FileWithPath[]) => {
    const file = acceptedFiles[0];
    const reader = new FileReader();
    reader.onloadend = () => {
      setImagePreview(reader.result as string);
    };
    if (file) {
      reader.readAsDataURL(file);
    }

    const formData = new FormData();
    formData.append("file", file);

    fetch("/dimensions", {
      method: "POST",
      body: formData,
    })
      .then((request) => request.json())
      .then((data) => {
        console.log(data);
      })
      .catch((error) => console.log(`Error: ${error}`));
  }, []);

  const { getRootProps, getInputProps, isDragActive } = useDropzone({ onDrop });

  const style = {
    padding: "20px",
    border: isDragActive ? "2px dashed cyan" : "2px dashed gray",
  };

  if (imagePreview) {
    return (
      <div>
        <img src={imagePreview} width="400px" alt="Preview" />
        {predictedClass && <p>Prediction: {predictedClass}</p>}
      </div>
    );
  }

  return (
    <div {...getRootProps()} style={style}>
      <input {...getInputProps()} />
      <div>Drop Image Here!</div>
    </div>
  );
}

export default ImageUpload;